Improved Time Dependency Modeling in Emotion Recognition: Advanced LSTM

Long short-term memory (LSTM) layers are a common building block of recurrent neural networks (RNNs) and are widely used in sequence modeling tasks such as machine translation. Because each LSTM layer receives its own hidden and cell states from the previous time step as inputs, it assumes that the current state of the layer (stored in its memory cell) depends only on the state of the same layer at the previous time step. This one-step time dependency limits how well the layer can model temporal information and is a major constraint of LSTM layers in RNNs.
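For reference, the standard LSTM update makes this one-step dependency explicit. The equations below use common textbook notation rather than the paper's own symbols: $\sigma$ is the logistic sigmoid, $\odot$ is element-wise multiplication, and $i_t, f_t, o_t, g_t$ are the input gate, forget gate, output gate, and candidate values. The new cell state $c_t$ is built from $c_{t-1}$ and $h_{t-1}$ alone, with no direct access to earlier states.

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
g_t &= \tanh(W_g x_t + U_g h_{t-1} + b_g) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$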

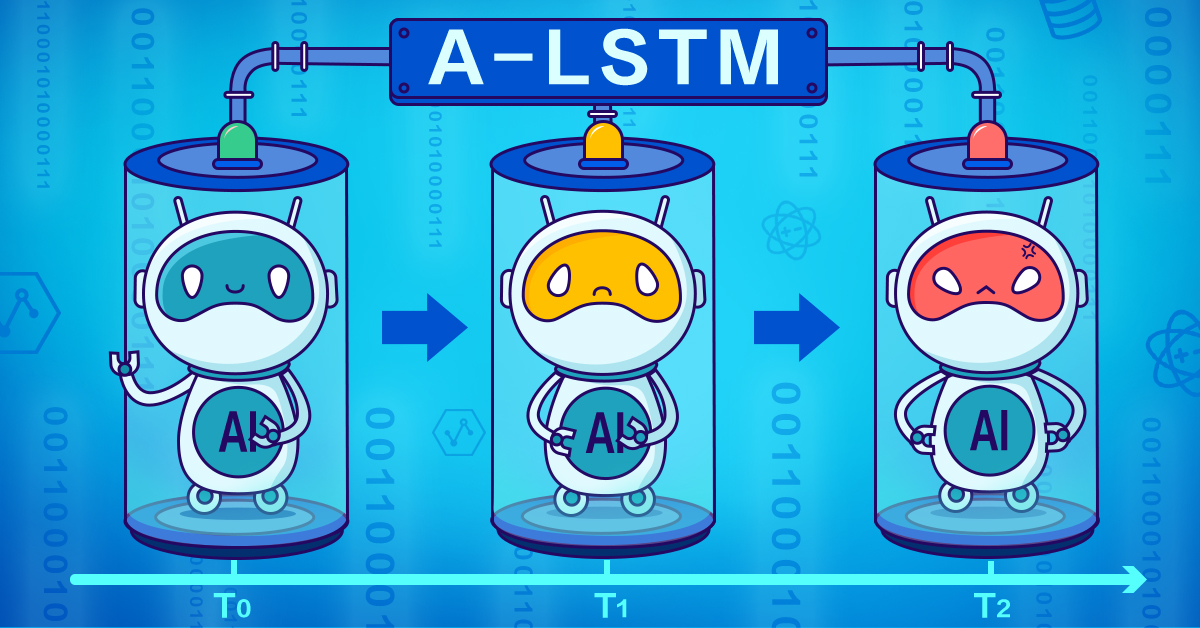

In this paper, researchers from Alibaba's tech team and The University of Texas at Dallas propose a variant of LSTM that they call advanced LSTM (A-LSTM), which relaxes the one-step time-dependency assumption. Instead of relying only on the previous cell state, A-LSTM uses a linear combination of cell states from several time points, with the combination weights learned by an attention mechanism. Because these weights are learned from data, the framework can flexibly learn the time dependency, as illustrated in the figure.
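The attention-weighted combination of past cell states can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the scoring function (a dot product between each past cell state and a learned vector `w_att`), the fixed `window` of past states, the gate layout, and all function names are hypothetical. Only the core idea, replacing the previous cell state with a learned weighted combination of several earlier cell states, comes from the description above.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: weights are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_cell_states(cell_history: List[Vector], w_att: Vector) -> Vector:
    """Weighted linear combination of past cell states.

    Hypothetical scoring: each past state c gets score w_att . c, and a
    softmax over the scores gives the combination weights.
    """
    scores = [sum(w * c for w, c in zip(w_att, state)) for state in cell_history]
    weights = softmax(scores)
    size = len(cell_history[0])
    return [sum(wt * state[k] for wt, state in zip(weights, cell_history))
            for k in range(size)]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              W: Matrix, bias: Vector) -> Tuple[Vector, Vector]:
    """One standard LSTM step; W has 4*H rows acting on [x; h_prev]."""
    H = len(h_prev)
    z = [zi + bi for zi, bi in zip(matvec(W, x + h_prev), bias)]
    i = [sigmoid(v) for v in z[0:H]]             # input gate
    f = [sigmoid(v) for v in z[H:2 * H]]         # forget gate
    o = [sigmoid(v) for v in z[2 * H:3 * H]]     # output gate
    g = [math.tanh(v) for v in z[3 * H:4 * H]]   # candidate values
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def a_lstm_step(x: Vector, h_prev: Vector, cell_history: List[Vector],
                W: Matrix, bias: Vector, w_att: Vector) -> Tuple[Vector, Vector]:
    """A-LSTM-style step (sketch): swap the single previous cell state for
    an attention-weighted combination of several past cell states."""
    c_combined = attend_cell_states(cell_history, w_att)
    return lstm_step(x, h_prev, c_combined, W, bias)


def run_a_lstm(xs: List[Vector], H: int, W: Matrix, bias: Vector,
               w_att: Vector, window: int = 3) -> List[Vector]:
    """Run the sketch over a sequence, attending over the last `window`
    cell states (the window size is an assumption)."""
    h = [0.0] * H
    cells = [[0.0] * H]  # zero initial cell state
    outputs = []
    for x in xs:
        h, c = a_lstm_step(x, h, cells[-window:], W, bias, w_att)
        cells.append(c)
        outputs.append(h)
    return outputs
```

Keeping only the last `window` cell states bounds memory per step. With a single state in the history, the softmax weight is 1 and the step reduces to an ordinary LSTM update, which makes a convenient sanity check.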

Through a study of emotion recognition, the research team shows that A-LSTM outperforms the original LSTM. Moreover, the improvement comes primarily from the new time-dependency mechanism, which confirms that Alibaba's approach addresses the one-step limitation of the original LSTM.

Read the full paper here.

. . .

Alibaba Tech

First-hand, detailed, and in-depth information about Alibaba’s latest technology → Search “Alibaba Tech” on Facebook